Last week I received a question from a user who was seeing the following HA error message on a cluster:

The number of heartbeat datastores for host is 1 which is less than required 2

Before we move further, I just wanted to clarify a few things about the error message.

You would see this error message only in vCenter Server 5.x and above. The Datastore heartbeat is what the HA master node uses to differentiate between a failure of the host and an isolation of the host in the cluster.

There is also a network heartbeat, sent over the management network, which HA uses as its first check that a host in the cluster is still reachable.

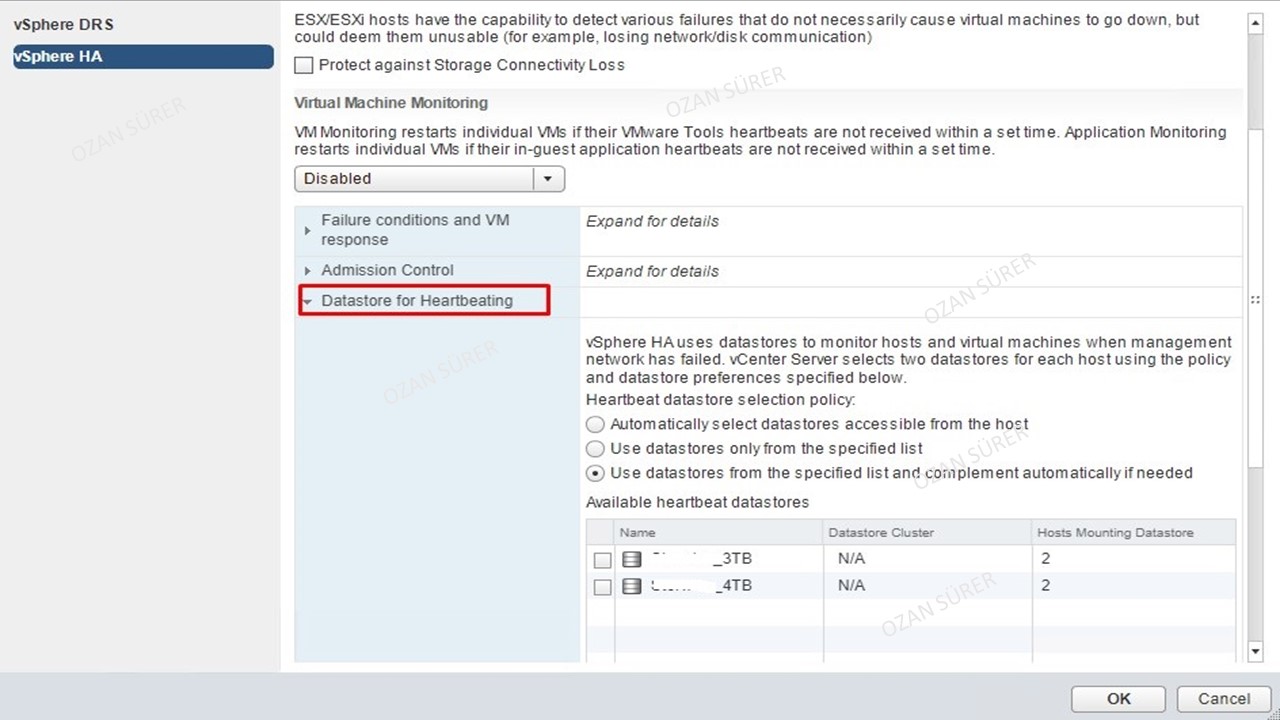

HA is a great feature, but let’s take a look at what happens if the management network were to fail. Without datastore heartbeats, the two hosts wouldn’t be able to communicate with each other over the network, so each would assume that the other had failed.

If both the network heartbeat and the datastore heartbeat are missing, the host is marked as dead and its VMs are restarted on other hosts in the cluster.
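The decision described above can be sketched as a small truth table. This is a simplified model for illustration only, not how the HA agent is implemented: the function name and the state labels are my own, and real HA applies further checks and timeouts before it declares a host dead.

```python
def classify_host(network_heartbeat: bool, datastore_heartbeat: bool) -> str:
    """Simplified view of how the HA master interprets a host's heartbeats."""
    if network_heartbeat:
        # The host is reachable over the management network.
        return "healthy"
    if datastore_heartbeat:
        # No network heartbeat, but the host still updates its heartbeat
        # datastore: it is alive, just isolated from the network.
        return "isolated"
    # Both heartbeats are missing: the host is treated as dead and its
    # VMs are restarted on other hosts in the cluster.
    return "dead"


for net, ds in [(True, True), (False, True), (False, False)]:
    print(f"network={net}, datastore={ds} -> {classify_host(net, ds)}")
```

The middle case is the reason datastore heartbeats matter: without them, a management network failure would be indistinguishable from a host failure.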

Getting back to our original error message: you receive it when fewer than two datastores are available for the heartbeat mechanism in the HA cluster.

By default, you need to have two datastores that can participate in the heartbeat mechanism for redundancy purposes.

There are two ways to get rid of this message:

- Add another datastore to the hosts that are part of the HA cluster.

- Change an advanced setting in HA to not show the error message even if there is only one datastore.

I highly recommend choosing the first option, because it gives you redundancy if there is an issue with one of your datastores.

Under some circumstances you might want to use the second option, which is changing an advanced setting in the HA cluster: for example, if you are working in a lab and do not have sufficient resources to create another datastore, or if there is a specific requirement to use only one datastore for the heartbeat mechanism.

The advanced setting that I am referring to is das.ignoreInsufficientHbDatastore. It is set to false by default.
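To make the effect of the setting concrete, here is a minimal sketch of the behaviour as described in this post. It is a model, not vCenter code: the function and constant names are hypothetical, and the only inputs are the number of heartbeat datastores a host can use and the value of the advanced setting.

```python
REQUIRED_HEARTBEAT_DATASTORES = 2  # the "required 2" from the error message


def heartbeat_warning(datastore_count: int, ignore_insufficient: bool = False):
    """Return the warning text, or None if no warning would be reported."""
    if ignore_insufficient:
        # das.ignoreInsufficientHbDatastore = true suppresses the message.
        return None
    if datastore_count < REQUIRED_HEARTBEAT_DATASTORES:
        return (
            f"The number of heartbeat datastores for host is {datastore_count} "
            f"which is less than required {REQUIRED_HEARTBEAT_DATASTORES}"
        )
    return None


print(heartbeat_warning(1))                            # warning text
print(heartbeat_warning(1, ignore_insufficient=True))  # None
print(heartbeat_warning(2))                            # None
```

Note that the setting only hides the message. A host with a single heartbeat datastore still has no redundancy for the datastore heartbeat.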

The steps below change the value to true so that the message is no longer reported on the hosts:

- Log into vCenter Server.

- Right-click the cluster and click Edit Settings.

- Click vSphere HA > Advanced Options.

- Under Option, add an entry for das.ignoreInsufficientHbDatastore.

- Under Value, type true.

- Click OK to save the settings.

Once you have saved the setting, right-click the ESXi host in the cluster that was reporting the message and click Reconfigure for vSphere HA.

Once the HA is reconfigured, you should no longer see the warning message on the host.

I hope this has been informative and thank you for reading!

You’d be surprised how many times I see a datastore that’s just been un-presented from hosts rather than decommissioned correctly. In one notable case I saw a distributed switch crippled for a whole cluster because the datastore in question was being used to store the VDS configuration.

This is the process that I follow to ensure datastores are decommissioned without any issues. They need to comply with these requirements:

- No virtual machine resides on the datastore

- The datastore is not part of a Datastore Cluster

- The datastore is not managed by storage DRS

- Storage I/O control is disabled for this datastore

- The datastore is not used for vSphere HA heartbeat
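The checklist above can be expressed as a small pre-flight check. This is only a sketch: the `DatastoreState` class and its field names are hypothetical, and you would have to fill them in from your own inventory. It simply reports which of the five requirements are not yet met.

```python
from dataclasses import dataclass


@dataclass
class DatastoreState:
    """Hypothetical snapshot of the facts the five requirements depend on."""
    vm_count: int
    in_datastore_cluster: bool
    managed_by_storage_drs: bool
    storage_io_control_enabled: bool
    used_for_ha_heartbeat: bool


def unmet_requirements(ds: DatastoreState) -> list:
    """Return the text of every requirement the datastore does not yet meet."""
    checks = [
        (ds.vm_count == 0, "No virtual machine resides on the datastore"),
        (not ds.in_datastore_cluster, "The datastore is not part of a Datastore Cluster"),
        (not ds.managed_by_storage_drs, "The datastore is not managed by storage DRS"),
        (not ds.storage_io_control_enabled, "Storage I/O control is disabled for this datastore"),
        (not ds.used_for_ha_heartbeat, "The datastore is not used for vSphere HA heartbeat"),
    ]
    return [text for ok, text in checks if not ok]


# Example: two VMs still on the datastore, and it is an HA heartbeat datastore.
state = DatastoreState(2, False, False, False, True)
for problem in unmet_requirements(state):
    print("Not met:", problem)
```

An empty list means the datastore is ready to be unmounted.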

Check the datastore for requirements

Datastore Clusters and Storage DRS

If the datastore is part of a Datastore Cluster then you can simply drag it out of the cluster to remove it. Once outside of the cluster, Storage DRS will be disabled, since you can’t use SDRS without being part of a cluster. Not sure why the requirements state both of these, but that sorts “The datastore is not part of a Datastore Cluster” and “The datastore is not managed by storage DRS”.

Storage I/O Control

If Storage I/O Control is enabled you’ll see a folder with the LUN ID in the root of the datastore:

Storage I/O Control can be manually enabled on a datastore without actually using SDRS or Datastore Clusters, so check that it is disabled by selecting the datastore in the Datastores and Datastore Clusters view and going to the Configuration tab. Click Properties to open the properties of the datastore and you’ll see the Storage I/O Control checkbox. Make sure it’s un-ticked and this will take care of the “Storage I/O control is disabled for this datastore” pre-requisite.

vSphere HA Heartbeat and dvsData

If you right-click and browse a datastore to be decommissioned you can see that there are potentially several components stored on it. In my screenshot below, “.dvsData” is the Distributed Switch configuration data (for more detail see http://www.virtual-blog.com/2010/07/what-is-the-purpose-of-dvsdata/) and “.vSphere-HA” contains HA configuration (for more detail see http://www.vladan.fr/what-is-vmware-vsphere-ha-folder-for/).

In the case of vSphere HA it will depend on your cluster’s settings (vSphere HA > Datastore Heartbeating) as to which datastores it uses. If you manually select datastores using “Select only my preferred datastores” then you need to go in and select new datastores. As far as I can tell, the only way you will fail the “The datastore is not used for vSphere HA heartbeat” check is if you manually specify only 2 datastores and then try to decommission one of them. Personally, I’ve yet to see a really good argument for specifying datastores to be used with HA.

The .dvsData folder is created on any VMFS datastore that has a Virtual Machine on it that also participates in the VDS, so by migrating your VMs off the datastore you’ll be ensuring the configuration data is elsewhere.

VMs and Templates

You can also see in my screenshot below a template folder and two virtual machines that haven’t been migrated across to a new datastore. An easy step is to migrate the VMs and move the template, which are pretty standard Storage vMotions. That qualifies you for the “No virtual machine resides on the datastore” pre-requisite. VMs or Templates that have been removed from Inventory will not block an unmount of a datastore, so move it or lose it!

Unmounting the Datastore

Once the datastore is fully evacuated you can browse to “Datastores and Datastore Clusters”, right-click the datastore and select Unmount.

The wizard then presents you with a list of hosts that can access the datastore, and the option to remove access from some or all of them. This datastore needs to be decommissioned, so remove access from all hosts:

Ensure you’ve got all the green ticks for the pre-requisites:

Finish the wizard and you should see the unmount tasks complete on each host that can see the datastore:

And the datastore is marked as inactive:

Detaching the unmounted datastores

This can be a bit of a manual process, going to each host in turn and detaching the storage device that backs the datastore (select the host > Configuration > Storage > Devices > Select device and right click > Detach). Or you could download @alanrenouf’s PowerCLI script to help make it a bit easier! It’s available here - https://communities.vmware.com/docs/DOC-18008.

Using the Detach-Datastore function is dead easy:

Get-Datastore "Datastore Name" | Detach-Datastore

(You can ignore the DEBUG messages. You won’t see them unless you have set $DebugPreference="Continue".)

Once this is done it’s now safe to un-present the LUNs from your hosts! Thanks for reading and I hope it saves you some time and hassle!